Welcome to Nural's newsletter focusing on how AI is being used to tackle global grand challenges.

Packed inside we have:

- Godfathers of AI feud over whether AI is an existential risk

- Regulation & more regulation: the US AI executive order, the G7 Code of Conduct on Artificial Intelligence (welcomed by the European Commission), and the UK AI summit

- and OpenAI launch preparedness team to explore existential AI risk

If you would like to support our continued work from £2/month, click here!

Marcel Hedman

Key Recent Developments

Godfathers of AI feud over whether AI is an existential risk

What: "In a series of online articles, blog posts and posts on X/LinkedIn over the past few days, AI pioneers (sometimes called “godfathers” of AI) Geoffrey Hinton, Andrew Ng, Yann LeCun and Yoshua Bengio have amped up their debate over existential risks of AI by commenting publicly on each other’s posts. The debate clearly places Hinton and Bengio on the side that is highly concerned about AI’s existential risks, or x-risks, while Ng and LeCun believe the concerns are overblown, or even a conspiracy theory Big Tech firms are using to consolidate power."

Key Takeaway: Against a backdrop of increasing pressure on regulators to ensure AI safety, an emerging and equally powerful voice is calling for AI's existential risks to be put in context rather than overblown. The fear is that overstating these risks may stifle open research and open-access models, which would only further consolidate power among the largest research labs.

In reality, a nuanced approach of context-specific regulation is likely the answer, one that distinguishes large labs with billions at their disposal from smaller or open-source projects.

You and Yoshua are inadvertently helping those who want to put AI research and development under lock and key and protect their business by banning open research, open-source code, and open-access models. This will inevitably lead to bad outcomes in the medium term.

— Yann LeCun (@ylecun) October 31, 2023

Biden joins the AI regulation party

What: Biden's administration have recently released an executive order on the safe, secure and trustworthy development and use of AI. "The order directs various federal agencies and departments that oversee everything from housing to health to national security to create standards and regulations for the use or oversight of AI... The order invokes the Defense Production Act to require companies to notify the federal government when training an AI model that poses a serious risk to national security or public health and safety."

Commission welcomes G7 leaders' agreement on Guiding Principles and a Code of Conduct on Artificial Intelligence

What: G7 leaders have agreed on a set of Guiding Principles and a voluntary Code of Conduct for AI, which will complement the forthcoming, legally binding EU AI Act. A selection of the principles is below:

- Take appropriate measures throughout the development of advanced AI systems, including prior to and throughout their deployment and placement on the market, to identify, evaluate, and mitigate risks across the AI lifecycle.

- Publicly report advanced AI systems’ capabilities, limitations and domains of appropriate and inappropriate use, to support ensuring sufficient transparency, thereby contributing to increase accountability.

- Prioritize the development of advanced AI systems to address the world’s greatest challenges, notably but not limited to the climate crisis, global health and education.

Key Takeaway: In a week that saw the UK AI summit take place and the US announce its executive order on AI, it is clear that governments are beginning to take AI regulation very seriously. The question remains how this will affect grassroots innovators who lack the resources to navigate a myriad of voluntary standards and mandatory laws. If the existential or societal risk is high enough, is this a price that must be paid regardless?

AI Ethics & 4 good

🚀 UK launches £100M AI fund to help treat incurable diseases

🚀 UN Announces Creation of New Artificial Intelligence Advisory Board

🚀 OpenAI launch preparedness team to explore existential AI risk

Other interesting reads

🚀 Google Commits $2 Billion in Funding to AI Startup Anthropic

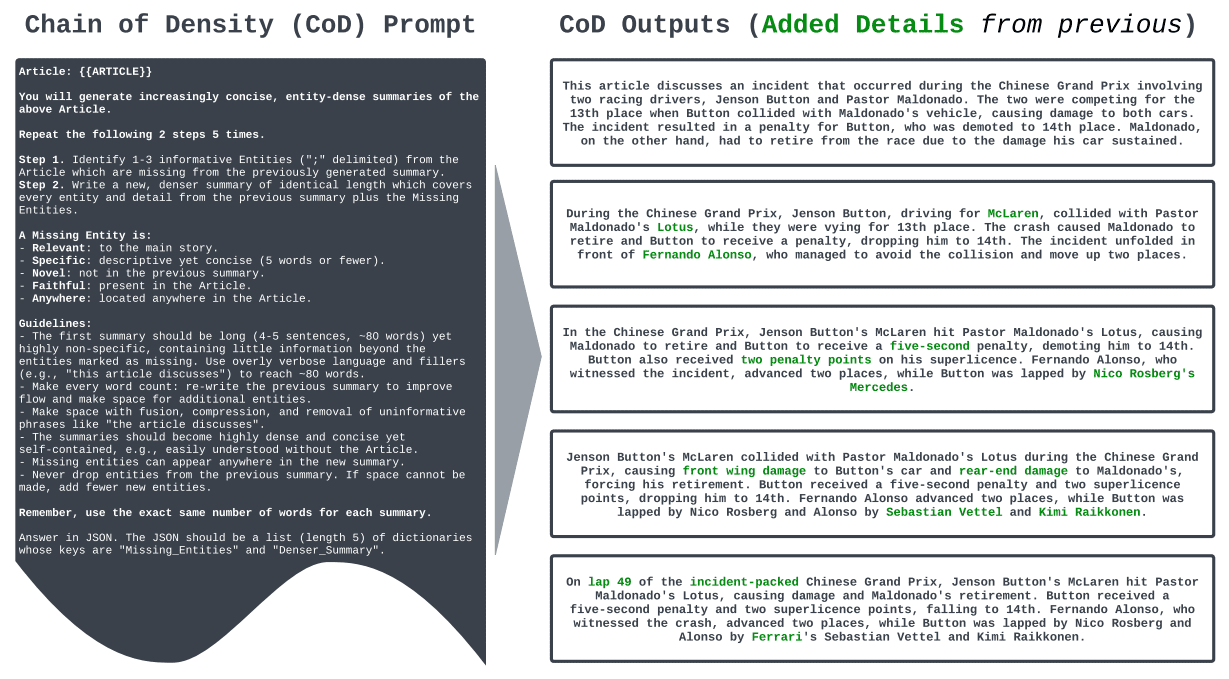

🚀 Unlocking GPT-4 Summarization with Chain of Density Prompting

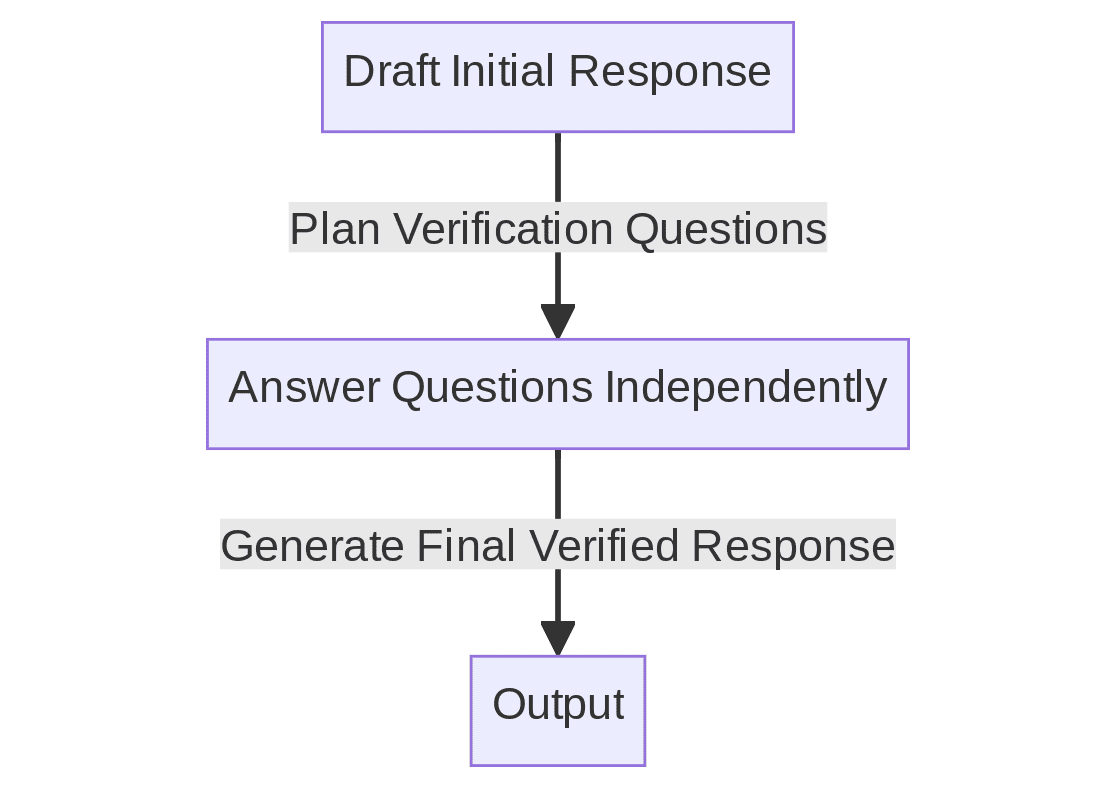

🚀 Unlocking Reliable LLM Generations through Chain-of-Verification: A Leap in Prompt Engineering

Papers & Repos

🚀 New techniques efficiently accelerate sparse tensors for massive AI models [MIT & NVIDIA]

🚀 ManimML - Animations of common ML concepts

🚀 EdiT5: Google introduces generative grammar checking

🚀 LivePose: Online 3D Reconstruction from Monocular Video with Dynamic Camera Poses

Cool companies found this week

Multimodal AI

Jina AI - Supporting the deployment of multimodal AI applications with ~$37m raised.

Twelve Labs - Multimodal video understanding: extracts key features from video such as actions, objects, on-screen text, speech and people.

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially, you can amend your plan here from £2/month!