Welcome to Nural's newsletter, where you will find a compilation of articles, news and cool companies, all focusing on how AI is being used to tackle global grand challenges.

Our aim is to make sure that you are always up to date with the most important developments in this fast-moving field.

Packed inside we have:

- DeepMind mathematics breakthrough

- Google and Cambridge propose universal transformer for image, video, and audio

- and an AI-designed "living robot" replicates autonomously

If you would like to support our continued work from £1, then click here!

Graham Lane & Marcel Hedman

Key Recent Developments

DeepMind makes breakthrough to give AI mathematical capabilities never seen before

What: DeepMind collaborated with mathematicians to apply AI to discovering new insights in areas of mathematics. The approach used supervised learning, training algorithms on labelled data to predict outcomes. The research proposes a new approach to a longstanding conjecture in representation theory and identifies a previously unknown algebraic quantity in the theory of knots. The AI detects patterns in mathematics at a vast scale and in very high-dimensional spaces, augmenting the insights of mathematicians and helping them prove new theorems.

Key Takeaways: Besides augmenting the work of mathematicians, DeepMind also notes that knot theory has connections with quantum field theory and non-Euclidean geometry. In particular, how algebra, geometry and quantum theory relate to one another in the study of knots remains an open question.

Paper: Exploring the beauty of pure mathematics in novel ways
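For readers new to the term, supervised learning means fitting a model to inputs paired with known outcomes (labels), then using that model to predict outcomes for new inputs. The sketch below is a generic illustration only and is not DeepMind's method: it fits a straight line to a few made-up labelled points by ordinary least squares in plain Python. The function names `fit_linear` and `predict` and the data are hypothetical.

```python
def fit_linear(xs, ys):
    """Fit y ~ a*x + b to labelled (x, y) pairs by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); intercept passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b


def predict(model, x):
    """Predict the outcome for a new, unlabelled input."""
    a, b = model
    return a * x + b


# Hypothetical labelled training data (inputs with known outcomes), generated by y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
model = fit_linear(xs, ys)
print(model)               # (2.0, 1.0)
print(predict(model, 10))  # 21.0
```

The same pattern (learn from labelled examples, then predict) underlies far larger supervised models; only the model family and the fitting procedure change.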

Google and Cambridge propose a universal transformer for image, video, and audio classification

What: The transformer neural network architecture was first introduced in 2017 and has had a profound impact on natural language processing and on the classification of images, video and audio. Until now, a separate model has typically been trained for each of these tasks. Researchers have now demonstrated a single transformer architecture, co-trained on image, audio and video, that is parameter-efficient and generalises across multiple domains.

Key Takeaway: Transformer models have grown to huge sizes, with ever-increasing financial costs and environmental impact. The demonstrated model achieves state-of-the-art performance while being more compact. It does not increase training cost compared with training separate models, and may even reduce it. This is an example of research that seeks to make existing models more efficient rather than creating ever-larger ones.

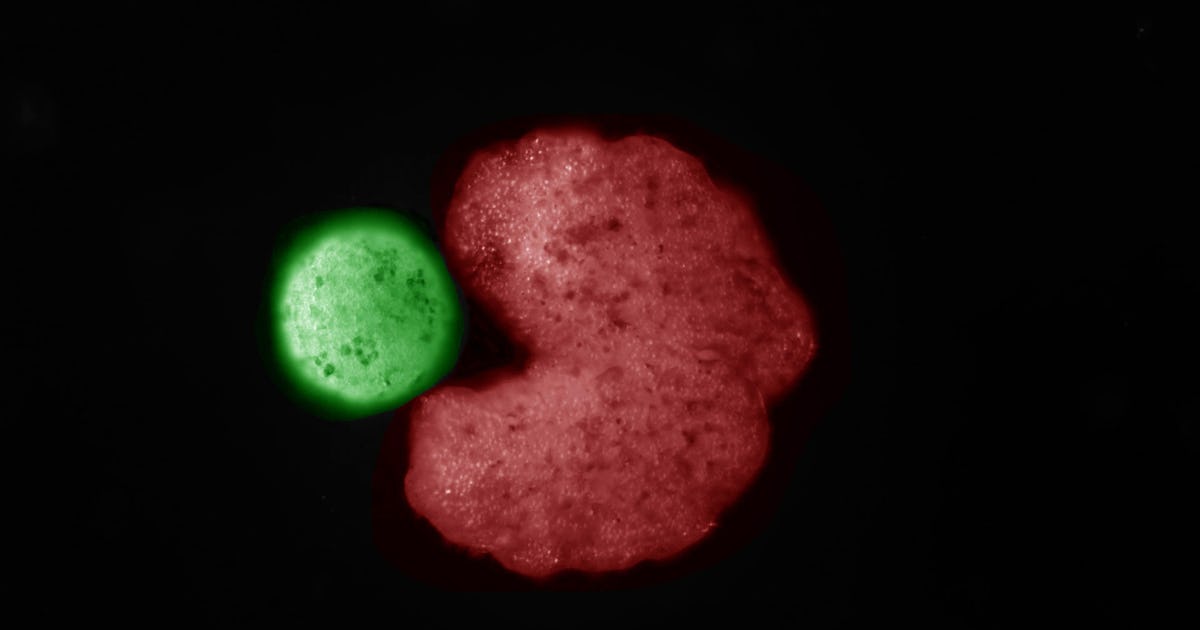

AI-designed "living robots" observed self-replicating

What: Xenobots are clusters of frog stem cells assembled into 3D shapes designed by AI, and are referred to as “living robots”. About the size of a grain of sand, they are built in the real world by humans. They can move around Petri dishes and push microscopic objects without external input. The xenobots have now been observed self-replicating in a manner previously unknown in nature. Tweaking the AI design further enhanced this reproductive process.

Key Takeaways: The authors speculate that this form of self-replication may have been essential to the origin of life. Furthermore, the authors note that the AI design methods can be applied to exaggerate existing behaviours and, “in future, possibly guide them toward more useful forms”.

Paper: Kinematic self-replication in reconfigurable organisms

AI Ethics

🚀 Algorithmic transparency standard

The UK government is piloting a toolkit for public bodies to provide clear information about the algorithmic tools they use.

🚀 Timnit Gebru launches independent AI research institute on anniversary of ouster from Google

The Distributed AI Research Institute (DAIR) will "create space for independent, community-rooted AI research free from Big Tech’s pervasive influence".

🚀 Software that studies your Facebook friends to predict who may commit a crime

The LAPD has been associated with controversial AI software that purports to discern people’s motives and beliefs by monitoring social media.

Other interesting reads

🚀 AI finds superbug-killing potential in human proteins

For the first time, scientists have found antibiotic peptides within proteins that are unrelated to the human immune response.

🚀 Researchers enhance predictive weather modeling with quantum machine learning

A real-world neural network was augmented with a quantum convolutional layer, leading to improved weather predictions.

🚀 AI tool lets you visualize how climate change could affect your home

An AI-driven experience enabling users to imagine the environmental impacts of the climate crisis, one address at a time.

Cool companies found this week

Transport

Airspace Intelligence - uses AI to suggest customised aircraft flight plans based on a complex set of variables, saving fuel and reducing carbon emissions. The company has raised an undisclosed sum in Series A funding.

Observability

Whylabs.ai - recently launched AI Observatory, a self-serve SaaS platform for monitoring data health and model health. The company has raised $10 million in Series A funding from Andrew Ng's AI Fund and other partners.

Healthcare

Zeit Medica - produces a smart headband that uses AI to monitor electrical activity in the brain and can summon help immediately if needed. The company raised $2 million in an oversubscribed seed funding round.

And Finally ...

Turn your portrait into an anime with AnimeGANv2

AI/ML must-knows

Foundation Models - any model trained on broad data at scale that can be fine-tuned for a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer Learning)

Few-shot learning - Supervised learning that uses only a small number of labelled examples to master a task.

Transfer Learning - Reusing parts or all of a model designed for one task on a new task with the aim of reducing training time and improving performance.

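Generative adversarial network - A generative model in which two networks, a generator and a discriminator, are trained against each other so that the generator learns to create new data instances that resemble the training data. They can be used to generate fake images.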

Deep Learning - Deep learning is a form of machine learning based on artificial neural networks with many layers.
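To make the Transfer Learning entry concrete, here is a minimal plain-Python sketch, assuming a hypothetical "pretrained" layer whose weights (`PRETRAINED_W`) stand in for ones learned on an earlier task. That layer is frozen and reused as a feature extractor, and only a small new output head (here a simple perceptron) is trained on the new task's handful of labelled examples. In practice the reused layers would come from a genuinely trained network such as BERT; the weights, data and function names here are all made up for illustration.

```python
# Hypothetical "pretrained" weights: stand-ins for a layer learned on an earlier task.
PRETRAINED_W = [[1.0, 1.0], [1.0, -1.0]]


def extract_features(x):
    """Frozen layer reused from the original model: linear map followed by ReLU.

    These weights are never updated while training on the new task.
    """
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def score(head, x):
    """Linear score of the new head applied to the frozen features."""
    weights, bias = head
    features = extract_features(x)
    return sum(w * f for w, f in zip(weights, features)) + bias


def predict(head, x):
    """Binary prediction for the new task."""
    return 1 if score(head, x) > 0 else 0


def train_head(data, epochs=20, lr=0.1):
    """Train only the new head with the perceptron rule; the pretrained layer stays frozen."""
    head = ([0.0, 0.0], 0.0)
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(head, x)
            if error != 0:
                weights, bias = head
                features = extract_features(x)
                weights = [w + lr * error * f for w, f in zip(weights, features)]
                head = (weights, bias + lr * error)
    return head


# A tiny, made-up dataset for the new task: (input, label) pairs.
new_task_data = [([0.0, 0.0], 0), ([1.0, 1.0], 1), ([2.0, 0.0], 1), ([0.0, 0.2], 0)]
head = train_head(new_task_data)
print([predict(head, x) for x, _ in new_task_data])  # [0, 1, 1, 0]
```

Because only the two head weights and the bias are learned, the new task needs very little data and training time, which is the point of reusing the earlier model.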

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially then you can amend your plan here from £1/month!