Welcome to Nural's newsletter where you will find a compilation of articles, news and cool companies, all focusing on how AI is being used to tackle global grand challenges.

Our aim is to make sure that you are always up to date with the most important developments in this fast-moving field.

Packed inside we have:

- Can Generative Flow Networks bridge the gap to human intelligence?

- Stanford's assessment of the state of AI

- and China regulates recommender algorithms

If you would like to support our continued work from £1 then click here!

Graham Lane & Marcel Hedman

Key Recent Developments

"I have rarely been as enthusiastic about a new research direction"

What: The above quote is from Yoshua Bengio, one of the world-renowned “godfathers” of deep learning. His recent blog post discusses Generative Flow Networks (GFlowNets), provides a number of useful resources, and promises “a volley of new papers”.

Generative Flow Networks combine elements of “reinforcement learning, deep generative models and energy-based probabilistic modeling”. They are capable of sequentially constructing complicated objects like molecular graphs, causal graphs or explanations of a scene. Whereas reinforcement learning typically searches for the single sequence of actions with the highest reward, a Generative Flow Network policy can be trained so that the terminal states it produces, taken as a whole, match a desired probability distribution.
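
As a rough illustration of that difference, here is a toy sketch in Python. It is not Bengio's method: a real GFlowNet learns its flows with a neural network, whereas this example computes them exactly on a tiny tree of bit strings. The problem, the `reward` function and all names are hypothetical, chosen only to show a step-by-step policy whose final objects appear with probability proportional to their reward.

```python
from itertools import product

LENGTH = 3  # hypothetical toy task: build a bit string one bit at a time


def reward(bits: str) -> float:
    # Hypothetical reward: strings with more 1s score higher (always positive).
    return 1.0 + bits.count("1")


def flow(state: str) -> float:
    # Flow through a state = total reward of every finished string reachable from it.
    if len(state) == LENGTH:
        return reward(state)
    return flow(state + "0") + flow(state + "1")


def policy(state: str) -> dict:
    # Each next bit is chosen in proportion to the flow of the state it leads to.
    total = flow(state)
    return {bit: flow(state + bit) / total for bit in "01"}


def terminal_probability(bits: str) -> float:
    # Multiply the per-step policy probabilities along the construction path.
    prob = 1.0
    for i, bit in enumerate(bits):
        prob *= policy(bits[:i])[bit]
    return prob


terminals = ["".join(p) for p in product("01", repeat=LENGTH)]
Z = sum(reward(x) for x in terminals)  # normalising constant

# Pure reward maximisation returns one object only.
greedy = max(terminals, key=reward)

# The flow-based policy spreads probability over all objects, in proportion to reward.
for x in terminals:
    print(x, round(terminal_probability(x), 3), round(reward(x) / Z, 3))
```

The two printed columns agree for every string, while `greedy` only ever returns the single best one. In this toy each string can be built in exactly one way; handling objects that can be reached by many different construction paths is part of what the full GFlowNet framework addresses.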

Key Takeaways: Bengio states clearly that the work is aimed at “bridging the gap between AI and human intelligence” by introducing features of higher-level cognition into deep learning networks, in order to master causality and generalise better to new or unusual scenarios. He has been developing these ideas for several years and says that the real inspiration for this work is to model the "thoughts in our mind".

Stanford report shows that ethics challenges continue to dog AI field as funding climbs

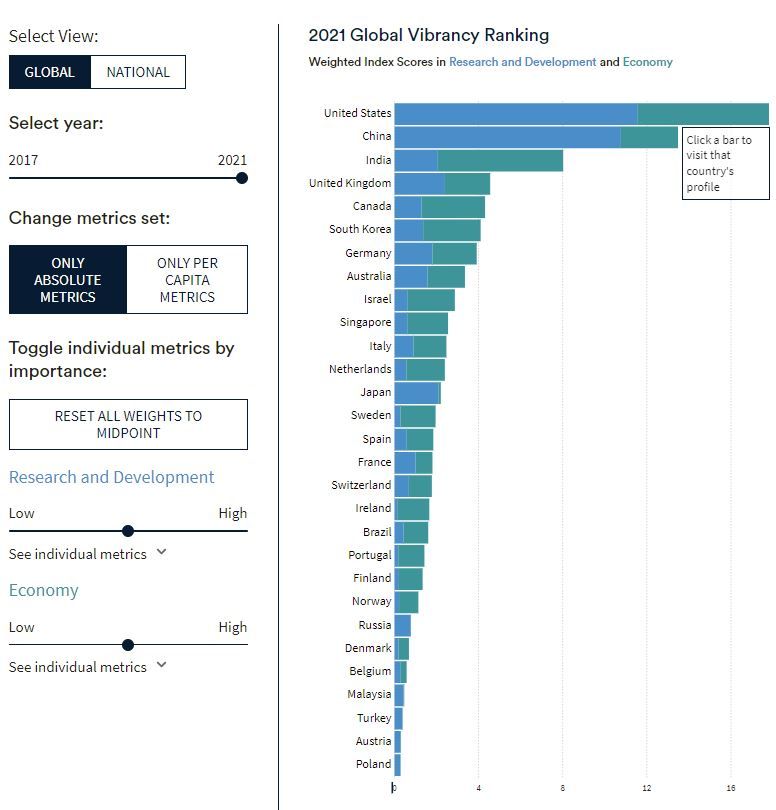

What: The fifth annual AI Index report from Stanford’s Institute for Human-Centered AI has been published. The report posits that 2021 was the point at which AI became a mature technology with widespread adoption and real-world impact. This wider deployment is leading to an increasing focus on ethical issues.

Key Takeaways:

Finance: Private investment in AI was about $93.5 billion in 2021, more than double the previous year, but the number of new companies receiving funding is decreasing year-on-year.

Technology: The time and cost of training models have decreased dramatically. The biggest companies are benefiting from using enormous, difficult-to-obtain AI training datasets. In 2021 nine of the top 10 state-of-the-art AI systems were trained with additional data.

Ethics: The impact of in-house ethics teams remains questionable. Meanwhile, a state-of-the-art language model in 2021 was 29% more likely to output toxic text compared to a smaller, simpler model in 2018. This suggests that the increase in toxicity corresponds with the increase in general capabilities.

China’s algorithm law takes effect to curb Big Tech’s sway in public opinion

What: A new regulation in China designed to rein in the use of recommendation algorithms in apps recently came into effect. Under the new regulations, service providers using algorithms must “promote positive energy” and allow users to decline personalised recommendations. The regulations offer consumer protection in that secret knowledge of customers’ internet spending habits may not be used to charge extra fees. There is also protection for gig economy workers against the excesses of management-by-algorithm. The regulations will support action against misinformation but can also be used by the authorities to clamp down on content recommendations that have the potential for “shaping public opinions” or “social mobilisation”.

Key Takeaways: Misinformation, hate speech, social fragmentation and the rights of gig economy workers are burning issues in many countries. China is an early mover in taking action but other countries will be watching and considering their own initiatives. At the moment the implementation of the regulations remains vague. How will algorithms be assessed and how will the intellectual property of companies be respected?

AI Ethics

🚀 Russia may have used a killer drone in Ukraine. Now what?

Russia has reportedly deployed “suicide drones” in Ukraine that can autonomously identify targets using AI, although it is not clear whether these have operated autonomously or under human control. A detailed Wired report about autonomous weapons speculates that “the situation unfolding in Ukraine shows how difficult it will really be to use advanced AI and autonomy”.

🚀 Ukraine has started using Clearview AI’s facial recognition during war

Ukraine is using controversial AI facial recognition software from Clearview to combat misinformation and to identify Russian combatants, prisoners and the dead. The Russian side does not have access to Clearview but almost certainly has similar capabilities. Identifying individuals in a war can be a double-edged sword. On the one hand, it may enable those guilty of human rights abuses to be brought to justice. On the other hand, it may expose individual soldiers and their families to reprisals.

🚀 Facebook Oversight Board

This Twitter thread provides an insight into the ethics work of the Facebook Oversight Board.

🚀 How artificial intelligence can help combat systemic racism

The author argues that we need to look beyond algorithm bias to an AI future where models more effectively deal with systemic inequality.

Other interesting reads

🚀 Will transformers take over AI?

This article provides a good overview of transformer models in AI (often associated with "foundation models" and "large language models"), why they are important and how they might be deployed in the future.

🚀 The FTC’s new enforcement weapon spells death to algorithms

The U.S. Federal Trade Commission has found an effective new enforcement mechanism: requiring companies that breach rules to delete algorithms, AI models, and data that has been collected improperly.

🚀 Using AI to support social interaction between children who are blind and their peers

The system helps blind children locate others in a room and also provides clues about when someone establishes "eye contact", which can be an opportunity to start a conversation.

🚀 It’s like GPT-3 but for code - fun, fast, and full of flaws

Nine months after its release, an in-depth look at real-world experience using the Copilot code completion tool, highlighting both impressive and frustrating results.

🚀 Molecular counterfactuals method helps chemists explain AI predictions

How to understand AI predictions? Firstly, take a molecule that the model predicts to have a certain property. Secondly, look for a broadly similar molecule that the model predicts to lack that property. Thirdly, compare the two molecules to ascertain whether the difference between them provides a plausible explanation for the presence of the property.
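
The three steps can be sketched in a few lines of Python. This is only a toy under loud assumptions: molecules are reduced to sets of named chemical groups, `predict` is a made-up stand-in for a trained model, and Jaccard overlap stands in for a proper molecular similarity measure. None of it is taken from the article's actual method or software.

```python
def predict(groups: frozenset) -> bool:
    # Hypothetical stand-in for a trained model: predicts the property
    # (say, "soluble") whenever a hydroxyl group is present.
    return "hydroxyl" in groups


def jaccard(a: frozenset, b: frozenset) -> float:
    # Similarity = shared groups divided by all groups present in either molecule.
    return len(a & b) / len(a | b)


def counterfactual(query: frozenset, candidates: list):
    # Steps 1 and 2: keep candidates whose prediction differs from the query's,
    # then return the one most similar to the query (None if there is none).
    target = predict(query)
    flipped = [c for c in candidates if predict(c) != target]
    if not flipped:
        return None
    return max(flipped, key=lambda c: jaccard(query, c))


# Hypothetical molecules described by the groups they contain.
query = frozenset({"benzene_ring", "hydroxyl", "methyl"})
candidates = [
    frozenset({"benzene_ring", "methyl"}),
    frozenset({"benzene_ring", "hydroxyl", "ethyl"}),
    frozenset({"amine", "carbonyl"}),
]

best = counterfactual(query, candidates)

# Step 3: the groups that differ are the candidate explanation.
difference = query ^ best
print(sorted(best), sorted(difference))
```

Here the closest molecule with the opposite prediction differs only in the hydroxyl group, which a chemist could then judge as a plausible or implausible explanation.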

Cool companies found this week

Machine Intelligence

Numenta - a team of scientists and engineers applying neuroscience principles to machine intelligence research. The aim is to reverse-engineer the neocortex and develop a blueprint that can be used to build machine intelligence technology. The company was co-founded by Jeff Hawkins, the author of two well-known books: On Intelligence (2004) and A Thousand Brains: A New Theory of Intelligence (2021). The company has operated since 2005 and is privately held. It last received round D funding of $5 million in 2012.

Autonomous Vehicles

Autobrains - a company that seeks to “radically re-imagine autonomous driving” so that it will be adaptive to contextual changes. This will be achieved by AI self-learning rather than using the more common supervised AI (with labelled examples). The company has raised $120 million in round C funding.

AI supercomputers

Luminous Computing - one of a number of startups developing “photonic chips” that operate using light rather than electricity. Theoretically, this could lead to higher performance and greater efficiency, thereby providing the increased power required to run future AI systems. The company has a mission to build “the most powerful, scalable AI supercomputer on Earth” and has raised $105 million in round A funding from backers including Bill Gates.

And Finally ...

Do you ever feel that you can't keep up?

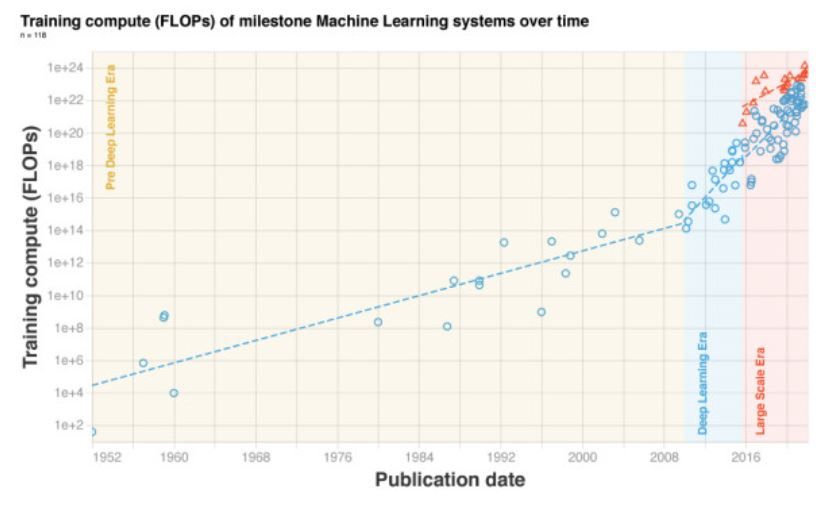

New research indicates that the power of AI systems is doubling every 6 months, significantly outstripping Moore's Law, under which computer processing power doubles roughly every two years.
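
To get a feel for what different doubling periods mean in practice, here is a small Python sketch of the arithmetic. The 6-month figure comes from the item above; the 24-month figure is the commonly cited Moore's Law period and is used here as an assumption. The function name is purely illustrative.

```python
def growth_factor(elapsed_months: float, doubling_months: float) -> float:
    # Something that doubles every `doubling_months` grows by
    # 2 ** (elapsed / doubling period) over `elapsed_months`.
    return 2.0 ** (elapsed_months / doubling_months)


two_years = 24
ai_growth = growth_factor(two_years, 6)      # doubling every 6 months
moore_growth = growth_factor(two_years, 24)  # assumed 24-month doubling period

print(ai_growth, moore_growth)  # 16.0 2.0
```

Over two years, a 6-month doubling period gives four doublings (16x), against a single doubling (2x) at the assumed Moore's Law pace.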

AI/ML must knows

Foundation Models - any model trained on broad data at scale that can be fine-tuned to a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer Learning)

Few-shot learning - Supervised learning that uses only a small number of labelled examples to master the task.

Transfer Learning - Reusing parts or all of a model designed for one task on a new task with the aim of reducing training time and improving performance.

Generative adversarial network - Generative models that create new data instances that resemble your training data. They can be used to generate fake images.

Deep Learning - A form of machine learning based on artificial neural networks with multiple layers.

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially then you can amend your plan here from £1/month!