Welcome to Nural's newsletter where you will find a compilation of articles, news and cool companies, all focusing on how AI is being used to tackle global grand challenges.

Our aim is to make sure that you are always up to date with the most important developments in this fast-moving field.

Packed inside we have

- OpenAI takes image generation to the next level with DALL-E-2

- DeepMind discovers the optimal balance for large language models

- and, what AlphaFold did next ...

If you would like to support our continued work from £1 then click here!

Graham Lane & Marcel Hedman

Key Recent Developments

DALL-E-2, the future of AI research, and OpenAI’s business model

What: OpenAI have introduced a new version of DALL·E, a deep learning network that generates images from text descriptions. DALL-E-2 produces much more accurate images and has an uncanny knack of correctly interpreting the text prompts. What is more, the new version can edit images, for example adding a vase of flowers to a coffee table.

Key Takeaways: The capabilities of the new version have impressed even world-weary AI experts. One of the early users discusses the strengths and weaknesses of the new system. Despite these advances, however, OpenAI acknowledges that it is still struggling to address many known issues. For example, all nurses, personal assistants and flight attendants generated by the model are female, whereas the lawyers, CEOs and builders are all male. For this reason, the system is not yet available to the public. There is still a long way to go …

Blog: DALL·E 2

DeepMind has found a way to efficiently scale large language models

What: DeepMind researchers investigated the optimal relation between the size of an AI model (number of different parameters) and the size of the training data (the number of “tokens”). They found that current large language models are “undertrained” because the size of the model has been increased whereas the size of the training data has been kept constant. Through experimentation they found that, for best results, the size of the model and the size of the training data should be scaled equally. They then trained a model, called Chinchilla, with the optimal ratio (using a smaller model but more training data) and found that it “significantly outperformed” well-known large language models on a range of tasks when both models were trained using the same amount of computing resource.

Key Takeaways: Large Language Models are criticised as being responsible for unsustainable levels of carbon emissions and being hugely expensive. In an attempt to address this, DeepMind has developed a way of finding the optimal balance between parameters and data that uses the least computing resource.

Paper: An empirical analysis of compute-optimal large language model training
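
For readers who want to see what "scaled equally" means in numbers, here is a minimal sketch. It rests on two rules of thumb that are commonly associated with the paper but are not stated in this newsletter, so treat them as assumptions: training compute is roughly 6 × parameters × tokens, and the compute-optimal ratio is roughly 20 tokens per parameter. The function name `compute_optimal_split` is hypothetical and used only for illustration.

```python
import math

# Assumed rules of thumb (not stated in the newsletter itself):
FLOPS_PER_PARAM_PER_TOKEN = 6   # training compute C is roughly 6 * N * D
TOKENS_PER_PARAM = 20           # compute-optimal D is roughly 20 * N


def compute_optimal_split(compute_flops: float) -> tuple[float, float]:
    """Return (parameters, tokens) that use up compute_flops under the assumptions above."""
    # Substitute D = 20 * N into C = 6 * N * D and solve for N.
    params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_PER_TOKEN * TOKENS_PER_PARAM))
    tokens = TOKENS_PER_PARAM * params
    return params, tokens


if __name__ == "__main__":
    # Each budget is 4x the previous one.
    for budget in (1e21, 4e21, 16e21):
        n, d = compute_optimal_split(budget)
        print(f"{budget:.0e} FLOPs -> {n:.2e} parameters, {d:.2e} tokens")
```

Under these assumptions, every fourfold increase in compute doubles both the parameter count and the token count, which is the "equal scaling" described above. A budget of about 5.9e23 FLOPs gives roughly 70 billion parameters and 1.4 trillion tokens, in line with the published Chinchilla configuration.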

What's next for AlphaFold and the AI protein-folding revolution

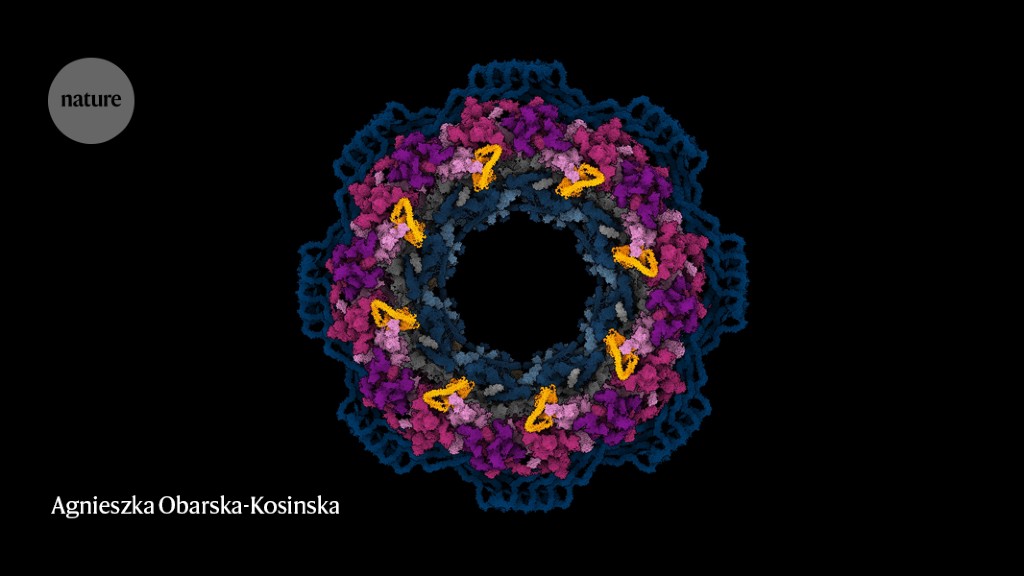

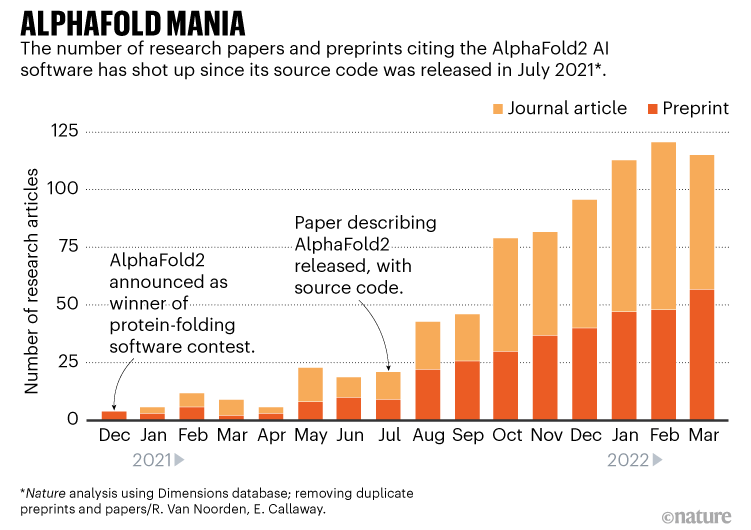

What: In July 2021 DeepMind released AlphaFold2, which predicts how proteins fold into complex 3D shapes. It soon announced that it had predicted the 3D structure of nearly every protein made by humans and has subsequently predicted the structures of nearly half of all known proteins. The technology is now being applied in a wide range of areas, for example examining the nuclear pore complex and the structure of bacterial proteins that promote the formation of ice.

Key Takeaways: AlphaFold2 is best used to provide an initial approximation to be validated by experiment because it isn’t always fully accurate. The researchers working specifically on drug discovery seem a little hesitant in their assessment – one talks of “critical optimism” and another sees AlphaFold2 speeding up the experimental process rather than replacing it. Accuracy and details are important in developing new drugs.

In late 2021, DeepMind launched a spin-off, Isomorphic Labs, to apply AlphaFold2 to drug discovery. Since then, the company has said little about its work. We wait to see how that is developing ...

AI Ethics

🚀 To truly target hate speech, moderation must extend beyond civility

A study has shown that white supremacists avoid the vulgar and toxic language that triggers automated systems, instead emphasising their whiteness by appending “white” to many terms, such as “white children”, “white women” and “the white race”.

🚀 New technology, old problems

The missing voices in Natural Language Processing.

🚀 California suggests taking aim at AI-powered hiring software

A proposed amendment to California's hiring discrimination laws would make it illegal for businesses and employment agencies to use automated-decision systems to screen out applicants who belong to a protected class.

Other interesting reads

🚀 Daedalus special edition - AI & Society

Daedalus is an open access journal published by the American Academy of Arts and Sciences. The Spring 2022 edition is dedicated to the theme of AI and Society, featuring 25 in-depth articles by leading figures in the field.

🚀 New ML models can predict adverse outcomes after abdominal hernia surgery with high accuracy

A procedure-specific risk calculator has the potential to encourage changes in patient behaviour before surgery, improving success in abdominal wall reconstruction operations.

🚀 What AI can do for climate change, and what climate change can do for AI

To tackle the climate crisis, AI is becoming more open and democratic.

Cool companies found this week

Health

Diligent - provides robots to support healthcare teams. Its robot, called Moxi, has featured in numerous promotional videos. The company has raised $30 million in Series B funding.

Finance

Lucinity - provides a system that combines human expertise and AI technology to fight against money laundering. It has been recognised as one of 10 startups riding the wave of AI innovation and last received venture funding of $6 million in July 2020.

Data privacy

Lightbeam - provides a data privacy automation platform using AI to identify personal data. The company has emerged from stealth with $4.5 million in seed funding.

And finally ...

AI/ML must-knows

Foundation Models - any model trained on broad data at scale that can be fine-tuned to a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer Learning)

Few-shot learning - Supervised learning that uses only a small dataset to master the task.

Transfer Learning - Reusing parts or all of a model designed for one task on a new task with the aim of reducing training time and improving performance.

Generative adversarial network - Generative models that create new data instances that resemble your training data. They can be used to generate fake images.

Deep Learning - A form of machine learning based on artificial neural networks.

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially then you can amend your plan here from £1/month!