Welcome to Nural's newsletter, where you will find a compilation of articles, news and cool companies, all focusing on how AI is being used to tackle global grand challenges.

Our aim is to make sure that you are always up to date with the most important developments in this fast-moving field.

Packed inside we have:

- Call for "community norms" to regulate AI

- Microsoft integrating powerful AI into user-facing software

- and AI trained with diverse partners may work better with humans

If you would like to support our continued work from £1, then click here!

Graham Lane & Marcel Hedman

Key Recent Developments

The time is now to develop community norms for the release of foundation models

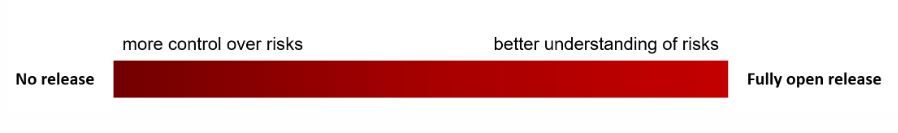

What: “Foundation models” is a term that refers to huge neural networks developed by large tech companies for tasks such as natural language processing and image generation (e.g. GPT-3 and Dall·E 2). These can be used by a myriad of downstream applications, so any problems with the foundation models may be propagated far and wide. Academic researchers can help identify and mitigate such problems, but different companies, such as Google, Meta and Microsoft, have different policies about releasing their models. The Center for Research on Foundation Models at Stanford University has proposed “a review board to develop community norms and encourage coordination on release of foundation models for research access”. Emphasising the problems of the current unmanaged landscape combined with the increasing power of the models, they state that “we must make decisions today not based on what exists but what could exist in the future.”

Key Takeaway: The researchers call for the “democratization” of foundation models through “an institutional design for collective governance, marked by fair procedures and aspiring toward superior outcomes”.

Paper: https://crfm.stanford.edu/2022/05/17/community-norms.html

Microsoft Demos AI Development at Build, Using OpenAI Codex

What: OpenAI developed Codex, a system that translates natural language into computer code. Based on this, Microsoft developed Copilot, which can “suggest additional lines of code and functions” to developers. Copilot was launched to a limited number of testers in summer 2021. In a recent update, Microsoft stated that it is now being used by over 10,000 developers and produces, on average, about 35% of their code. Codex and Copilot will be made more generally available in the summer. Microsoft also demonstrated an agent for the game Minecraft that can generate code based on written instructions.

Key Takeaways: The Minecraft agent provides an example of how AI might be integrated into a vast range of user-facing applications, such as Microsoft Excel. Furthermore, Microsoft is continuing to integrate no- or low-code solutions into its applications with the aim that such technology will “empower not just developers, but also creators”. Powerful AI is being incrementally integrated into user-facing applications and this will ultimately have a profound impact on how people interact with computers.

AI that adapts to diverse play-styles in a cooperative game may be a win for human-AI teams

What: In the future, humans and AI will need to partner in high-stakes jobs, such as performing complex surgery or defending against missiles. However, research indicates that humans often do not like or trust their AI partners. In reinforcement learning, AI models are trained to maximise a particular objective, but this may not be appropriate for collaborative undertakings. Instead, researchers trained an AI agent to play a collaborative game and gave it an additional objective: identifying the play style of its AI training partner (which was varied). The aim was to develop an AI agent that is good at playing collaboratively with different play styles.

Key Takeaway: The AI agent that was trained with diverse AI training partners performed better than those that were trained with a single partner. This diverse model has not yet been tested with human partners. However, both DeepMind and Facebook have independently carried out similar research with similar results. The DeepMind research did study human partners and reports that they demonstrated “a strong subjective preference” for partnering with a diverse-trained AI agent.

DeepMind paper: Collaborating with Humans without Human Data

Facebook paper: Trajectory Diversity for Zero-Shot Coordination

AI Ethics

🚀 China and Europe are leading the push to regulate A.I. — one of them could set the global playbook

🚀 AI should be recognized as inventor in patent law

🚀 How amalgamated learning could scale medical AI

Other interesting reads

🚀 AI can help fight climate change—but it can also make it worse

🚀 Novel AI algorithm developed for assessing digital pathology data

🚀 How AI is improving the web for the visually impaired

Cool companies found this week

Health

MammoScreen - provides an AI-based system that scans 2D and 3D mammograms, generating a score indicating the likelihood of breast cancer and highlighting potentially cancerous areas. The system has already gained U.S. FDA approval and the company has now raised $15 million in Series B funding.

Climate

AiDash - has launched a new satellite- and AI-powered software-as-a-service product that integrates data from weather forecasts, highly accurate disaster models and precise asset data. The service constructs a digital twin for predicting and responding to the anticipated impact before, during and after a significant disaster or extreme weather event. This follows the company raising $27 million in Series B funding in September 2021.

Research

Code Ocean - offers what it claims is a first-of-its-kind software-as-a-service research platform promoting scientific collaboration and discovery in areas such as AI and ML. It is based on the concept of open science and allows easy migration of code and data across platforms. A spokesperson hopes that the system will ensure that “every paper will be reproducible”. The company has announced a $16.5 million Series B funding round.

And finally ... some robots will do anything for publicity

AI/ML must knows

Foundation Models - any model trained on broad data at scale that can be fine-tuned to a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer Learning)

Few-shot learning - Supervised learning that uses only a small number of labelled examples to master a task.

Transfer Learning - Reusing parts or all of a model designed for one task on a new task with the aim of reducing training time and improving performance.

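Generative adversarial network - A class of generative models that create new data instances resembling the training data, by training a generator network against a discriminator network that tries to tell real data from generated data. They can be used, for example, to generate fake images.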

Deep Learning - A form of machine learning based on artificial neural networks with many layers.

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially then you can amend your plan here from £1/month!